Look, sometimes you make bets early on in the process and they don't pan out. It happens! I've wanted to write this post since the first time I did this sort of work, as I remember how much I wished I'd had somebody who'd done this sort of job to give me guidance.

In this post

I'll talk about a few weeks of work I did to merge two AWS regions' worth of infrastructure together, including all of the data we held, with less than 60 seconds of downtime. I'll tell you what we used, what was messy, and what we learned. This post will likely be of interest to you if:

- You're about to make this sort of decision and want to see what might come later

- You made the same decision we did and are now facing the same fork in the road

- You're interested in some of the technologies that enable this sort of shift

- Honestly? Schadenfreude.

The Decision

Like every engineer, at some point I found myself faced with the consequences of an early architectural decision to allow us to scale. The team I was working with was small, and had to make a lot of decisions quickly in order to keep releasing features, keep those pesky stakeholders happy, and not get buried in a quagmire of analysis paralysis.

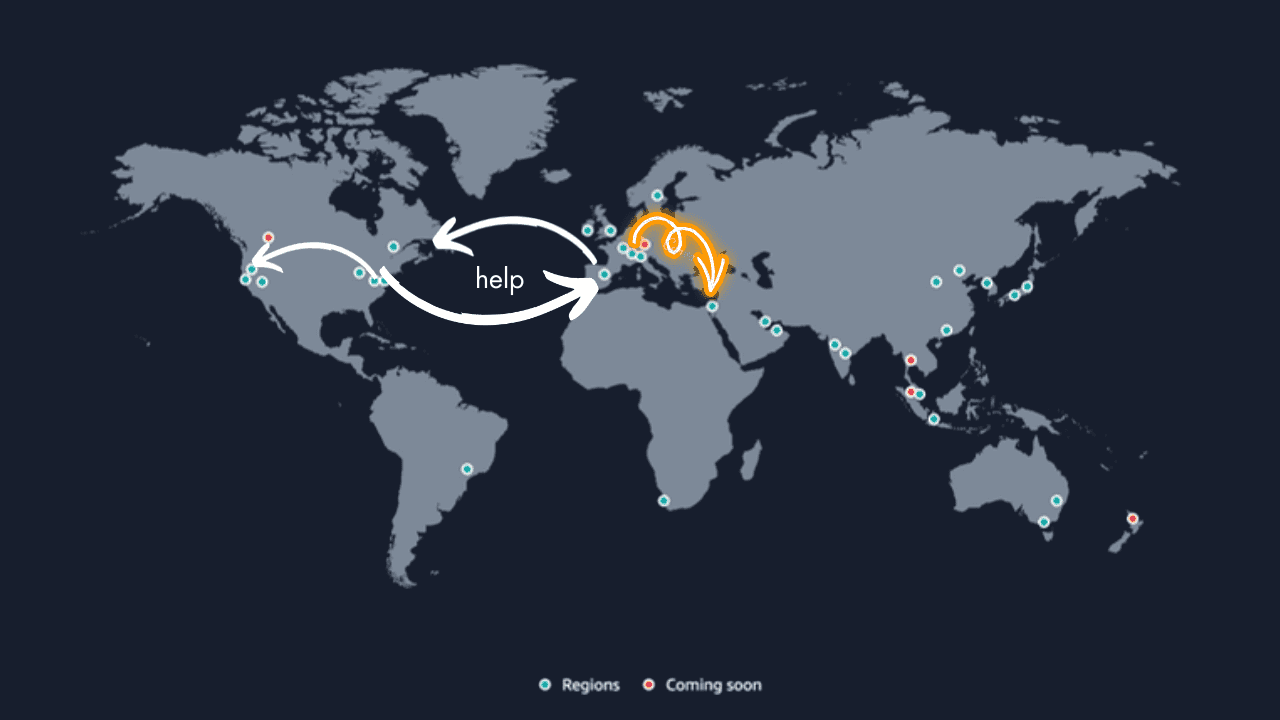

One such decision happened when we expanded outside of the UK. In this instance, it was quicker to spin up a relatively identical version of our infrastructure in the new market, and that also helped with some business logic elements of the work we were doing. To be clear - it made the most sense at the time given the shape of the team, the state of the product, and the time available to us.

Unfortunately, a year or so after I'd started working with this team, we were seeing problems with this split. Aside from the obvious cost of running a decently sized RDS instance and associated ECS clusters month-on-month, there were also all of the operational considerations that come with running more than one stack in isolation. Things came to a head when a product decision ultimately required that we pull all of our data into one place, instead of holding it per region, and the strongest option was to merge the two regions together.

The Stack

In each region we had:

- ECS running our APIs

- An S3 bucket for user content (at this time, no Cloudfront)

- A decently sized MySQL RDS instance in each region, with read replicas to take the load off of the primary instance during peak times

- Redis instances to cache some useful data during peak hours

It's nothing too complex, I'll admit.

Approaching the problem

Before we actually built anything, we had to do a fair bit of planning. If you're just interested in how we built it then skip past this bit.

Where are we, where do we want to be?

It was important to stop and understand the size and goal of the task. Spinning up the infrastructure wasn't too much of a problem - we had 99% of our estate in Terraform. The problem was, we also had several hundred thousand users, a few million bits of user generated content, and tens of millions of user interactions, split across two regions.

We decided that we wanted to move into an entirely new region, distinct from the two previous ones. This was mostly because it afforded us a bit of distance from us-east-1 and put us in a region that gets new releases from AWS much faster, but it also gave us additional governance cover and a few other things I won't talk about.

We also decided early on what we were willing to accept during such a shift, which gave us the equivalent of 'acceptance criteria'. Things like:

- Acceptable downtime before we revert

- Acceptable data lag / inconsistency during migration

- All the unhappy paths a user might face before, during, and after implementation, and what we were willing to accept in each case (for instance, a smattering of users needing to log back in, versus 25% of our user base)
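As a rough illustration, criteria like these can be written down as data so that the revert decision is mechanical rather than a judgment call mid-cutover. The Python sketch below uses hypothetical names (`CutoverCriteria`, `breached_criteria`) and made-up thresholds; none of the numbers come from the migration described here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CutoverCriteria:
    """Limits agreed before the migration. All values are hypothetical."""
    max_downtime_seconds: float     # downtime tolerated before reverting
    max_data_lag_seconds: float     # data lag tolerated during migration
    max_forced_logout_ratio: float  # share of users allowed to be logged out


def breached_criteria(criteria, downtime_seconds, data_lag_seconds, forced_logout_ratio):
    """Return the names of the limits that were exceeded (empty list = carry on)."""
    observed = {
        "downtime": (downtime_seconds, criteria.max_downtime_seconds),
        "data_lag": (data_lag_seconds, criteria.max_data_lag_seconds),
        "forced_logouts": (forced_logout_ratio, criteria.max_forced_logout_ratio),
    }
    return [name for name, (value, limit) in observed.items() if value > limit]


# Example thresholds, purely for illustration.
criteria = CutoverCriteria(
    max_downtime_seconds=60,
    max_data_lag_seconds=30,
    max_forced_logout_ratio=0.05,
)
```

Any non-empty result from `breached_criteria` would be the trigger to revert.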

Step 2 - crowdsourcing information

As I wrote at the beginning, one of the reasons I've wanted to write this post is because of how much I wished I'd had somebody employed with me saying 'yeah I've done exactly this before' as we tackled it together, but I won't deny how rewarding it was to plan and execute such a big move mostly by myself (although I doubt I'd be as positive had it gone wrong!).

In our planning, we grabbed our AWS Account Manager (which I always recommend when you're planning something big / new), and got them to put us in touch with a couple of solutions architects. I also spoke to anybody and everybody I knew to see if they'd done anything similar (which a few had - if you're reading this then thank you).

I really can't overstate the benefits of reaching out to people for help. With all the love and admiration in the world, reader, there's a 95% chance that you're not the first person to ever have to solve your particular technical problem. Post on a forum, ask people in your network, speak to a colleague or mentor, hell just pay somebody to talk through the problem with you for an hour. I'll even begrudgingly suggest speaking to one of the infinite GPTs that are popping up.

The Technical Implementation

Step 0 - Oh shit, authentication

The first hurdle we encountered before even beginning to build was that of our user authentication. Leaving aside the debate about JWTs as an authentication mechanism, we used JWTs to authenticate users, and they were signed with different keys in each environment. Good on us for not reusing keys, but also... bugger. Our first job was to handle this - security should never be an afterthought!

If you face this issue, you have some options to build:

- Make sure you have an attribute in the new environment to identify which region each user came from, and build authentication logic to verify each user's token with the matching old signing key

  - Pros: Don't have to refresh everybody's access tokens, nobody risks having a broken auth flow

  - Cons: Potentially have to maintain logic for those two regions going forward - how will users created in the new environment behave?

- Create a new shared signing key, wait for your users to refresh using their old tokens, and send them back a new token signed with the shared key when they do

  - Pros: Makes it easy to clean up region-specific logic later on, and avoids any potential issues that might arise from keeping that sort of legacy implementation going

  - Cons: Have to wait a bit for enough of your users to refresh their tokens that you're comfortable forcing the rest to log back in again manually when the change is made and their token is rejected.
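To make the second option concrete, here is a minimal Python sketch of a refresh handler that accepts a token signed with either an old regional key or the new shared key, and always hands back one signed with the shared key. It assumes HS256 (HMAC) signing, which the setup described above may not have used, and the helper names (`sign`, `verify`, `refresh`), the `origin_region` claim and the region labels are all hypothetical. Expiry checks are left out for brevity; a real service would use a maintained JWT library rather than hand-rolled code.

```python
import base64
import hashlib
import hmac
import json


def _b64url(data: bytes) -> str:
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def _b64url_decode(text: str) -> bytes:
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def sign(claims: dict, key: bytes) -> str:
    """Build an HS256-signed JWT for the given claims."""
    header = _b64url(json.dumps({"alg": "HS256", "typ": "JWT"}).encode())
    payload = _b64url(json.dumps(claims).encode())
    mac = hmac.new(key, f"{header}.{payload}".encode("ascii"), hashlib.sha256)
    return f"{header}.{payload}.{_b64url(mac.digest())}"


def verify(token: str, key: bytes):
    """Return the claims if the signature matches this key, else None."""
    try:
        header, payload, signature = token.split(".")
        mac = hmac.new(key, f"{header}.{payload}".encode("ascii"), hashlib.sha256)
        if not hmac.compare_digest(mac.digest(), _b64url_decode(signature)):
            return None
        return json.loads(_b64url_decode(payload))
    except ValueError:  # malformed token, bad base64 or bad JSON
        return None


def refresh(token: str, shared_key: bytes, legacy_keys: dict):
    """Accept a shared-key or old regional token; reissue with the shared key."""
    claims = verify(token, shared_key)
    if claims is None:
        for region, key in legacy_keys.items():
            claims = verify(token, key)
            if claims is not None:
                # Record where the user came from (the attribute from option one).
                claims = {**claims, "origin_region": region}
                break
    if claims is None:
        return None  # unknown signature: the user has to log in again
    return sign(claims, shared_key)
```

Once enough users have passed through `refresh`, the `legacy_keys` mapping can be emptied and the remaining old tokens simply fail verification.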

Step 1 - Get Cloudfront in front of everything

My colleagues have taken the piss out of me at least once a month for declaring a fondness for specific AWS services, but I do love Cloudfront. In this instance, it helped in multiple areas of the stack, not necessarily for its caching, but for its use as a proxy.

Before we built anything new, we put Cloudfront in front of our current content serving S3 buckets. This gave us the power to test migrated content early on, as we could just redirect the S3 origin which Cloudfront was serving from, whilst keeping object naming the same.

We also placed Cloudfront distributions in front of our ECS services (or specifically, in front of the associated load balancers), giving the same power of abstraction, as well as setting the groundwork for some easy wins later on around content caching and failover routing.

This all meant that we didn't have to update region-specific hostnames for served content or APIs within our user-facing applications. We could just change the target behind the scenes, and then once redirected, we could update our users' configuration from there.
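Changing the target behind a distribution can be sketched in Python as below. The pure function `retarget_origin` (a hypothetical name) swaps one origin's domain in a CloudFront `DistributionConfig` dictionary, and `switch_origin` applies it through a boto3 CloudFront client supplied by the caller, using the `get_distribution_config` and `update_distribution` calls. The origin IDs and domains in the usage note are placeholders, and the sketch assumes the new origin needs no other changes (access settings, protocol policy and so on).

```python
import copy


def retarget_origin(distribution_config: dict, origin_id: str, new_domain: str) -> dict:
    """Return a copy of the config with one origin's DomainName replaced."""
    updated = copy.deepcopy(distribution_config)
    for origin in updated["Origins"]["Items"]:
        if origin["Id"] == origin_id:
            origin["DomainName"] = new_domain
            return updated
    raise KeyError(f"no origin with Id {origin_id!r}")


def switch_origin(cloudfront, distribution_id: str, origin_id: str, new_domain: str) -> None:
    """Point an existing distribution at a new origin.

    `cloudfront` is expected to be a boto3 CloudFront client,
    e.g. boto3.client("cloudfront").
    """
    current = cloudfront.get_distribution_config(Id=distribution_id)
    new_config = retarget_origin(current["DistributionConfig"], origin_id, new_domain)
    cloudfront.update_distribution(
        Id=distribution_id,
        DistributionConfig=new_config,
        IfMatch=current["ETag"],  # rejects the update if someone else changed it first
    )
```

A call might look like `switch_origin(client, "EXAMPLEID", "content", "new-bucket.example.com")`. Because the hostname users see never changes, reverting is the same call with the old domain.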

Step 2 - Create our new region

Thanks to our use of infrastructure as code, this bit wasn't too difficult. We copied the Terraform configurations we had been using in our other regions and adapted them for the new one (maybe a couple of lines to change there). The biggest change was a refactor to our Terraform to separate a few bits of the stack, as over time it had grown into a bit of a behemoth, which affected the time it took to run terraform apply (and somewhat tightly coupled a lot of our stack).

Step 3 - S3 replication

S3 replication was the first of two off-the-shelf tools that let us shift our data without it getting too laborious. We had been using S3 replication in production beforehand for the obvious case of redundancy (which I highly recommend unless you've got some particularly disturbing S3 buckets). Setting it up to replicate into our new region was therefore pretty easy. Whilst AWS says that most objects replicate within 15 minutes, we found that once replication had been applied it was pretty instantaneous. This may have been because our buckets were comparatively small (1-2 TB each), or maybe we were just lucky.
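For reference, a replication rule of this kind can be sketched in Python as a plain dictionary passed to boto3's `put_bucket_replication`. The function names, rule ID and ARNs here are hypothetical, the empty prefix is an assumption that every object should be copied, and the sketch assumes versioning is already enabled on both buckets, which S3 replication requires.

```python
def replication_config(role_arn: str, destination_bucket_arn: str) -> dict:
    """Build a single-rule replication configuration covering every object."""
    return {
        "Role": role_arn,  # IAM role that S3 assumes to copy objects
        "Rules": [
            {
                "ID": "replicate-to-new-region",
                "Priority": 1,
                "Status": "Enabled",
                "Filter": {"Prefix": ""},  # empty prefix matches all keys
                "DeleteMarkerReplication": {"Status": "Disabled"},
                "Destination": {"Bucket": destination_bucket_arn},
            }
        ],
    }


def enable_replication(s3, source_bucket: str, role_arn: str, destination_bucket_arn: str) -> None:
    """Apply the rule using a boto3 S3 client supplied by the caller."""
    s3.put_bucket_replication(
        Bucket=source_bucket,
        ReplicationConfiguration=replication_config(role_arn, destination_bucket_arn),
    )
```

One thing to check before relying on a rule like this: by default it only picks up objects written after it is applied, so content that already exists in the source bucket needs a separate copy, for example with S3 Batch Replication.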

Step 4 - AWS DMS

Whilst we were looking into how to shift two databases into a third database, we found AWS Database Migration Service (DMS). I doubt that merging two sources into one target is a commonly found use case, but we did find some content that suggested it was perfectly feasible.

Honestly, this did a lot of the heavy lifting for us. Once you've configured DMS (and honestly the most painful part of that is your normal networking / security groups / likely VPC peering), it'll start picking up data and loading it into a target database pretty easily. Our biggest concern throughout this bit of the project was the potential for key clashing, which would've made this a much more difficult operation. Thankfully we'd been using UUIDs in the DB from day 1.
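The key clash concern is easy to see with a toy example. In the Python sketch below (hypothetical data and function name), two regions using auto-increment IDs both start counting from 1 and collide when merged, whereas independently generated UUIDs do not in practice.

```python
import uuid


def clashing_keys(region_a_ids, region_b_ids):
    """Primary keys present in both sources; each one would collide after a merge."""
    return sorted(set(region_a_ids) & set(region_b_ids))


# Auto-increment keys: each region counts from 1, so the ranges overlap.
region_a_serial = [1, 2, 3]
region_b_serial = [1, 2]

# UUID keys: generated independently, so an overlap is vanishingly unlikely.
region_a_uuids = [str(uuid.uuid4()) for _ in range(3)]
region_b_uuids = [str(uuid.uuid4()) for _ in range(2)]

print(clashing_keys(region_a_serial, region_b_serial))
print(clashing_keys(region_a_uuids, region_b_uuids))
```

Against real databases the same check would be a query comparing key columns across the two sources, run before pointing both at one target.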

Once DMS is set up, you can just keep it running, and as records are updated / populated in a DB it will forward those through to the target database (it's much more complicated under the hood, and you should definitely do a bit of reading to understand how this works, but it's pretty great). Barring any weird clashes at the moment of transition, we knew that every user would have any updates they made up until the last second replicated to the new database.
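As a sketch of what such a task definition looks like, the Python below builds the parameters for boto3's DMS `create_replication_task` call. The `full-load-and-cdc` migration type copies existing rows and then keeps streaming changes, which matches the behaviour described above. The function names, task name, schema name and ARNs are all placeholders, and the assumption is one task per source region, each pointing at the same target endpoint.

```python
import json


def table_mappings(schema: str) -> str:
    """DMS selection rule (as a JSON string) including every table in one schema."""
    rules = {
        "rules": [
            {
                "rule-type": "selection",
                "rule-id": "1",
                "rule-name": "include-all-tables",
                "object-locator": {"schema-name": schema, "table-name": "%"},
                "rule-action": "include",
            }
        ]
    }
    return json.dumps(rules)


def replication_task_params(name, source_endpoint_arn, target_endpoint_arn,
                            replication_instance_arn, schema):
    """Keyword arguments for dms.create_replication_task(**params)."""
    return {
        "ReplicationTaskIdentifier": name,
        "SourceEndpointArn": source_endpoint_arn,
        "TargetEndpointArn": target_endpoint_arn,
        "ReplicationInstanceArn": replication_instance_arn,
        # Full load of existing data, then ongoing change data capture.
        "MigrationType": "full-load-and-cdc",
        "TableMappings": table_mappings(schema),
    }
```

With a boto3 client this would be applied as `boto3.client("dms").create_replication_task(**params)`. For a MySQL source, the ongoing part relies on the binary log, so that needs to be enabled on the source instance first.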

With all of our data being copied over in pretty much real time, we were ready to...

Step 5 - Test and release!

As we were building this, we knew we wanted to be able to test it in isolation before having our changes affect our users. To this end, we spun up the new environment, and had it running for about 2 weeks before we did the cutover. It was definitely a delightful moment to connect to the 'hidden' new region and see all of our users, content and all. During this time, we tried to simulate usage as best as possible - using VPNs to emulate traffic coming from the US to this new region to get an understanding of latency and how this change would affect performance for users.

After a couple of weeks, during a period that we knew would be very low traffic, we switched our Cloudfront distribution origins to use the bucket and load balancers from the new region. After frantically refreshing for a minute waiting for an error or to notice an issue, we took a look through our observability tools. Quietly, and without issue, we had shifted our workloads to the new environment.

Learnings

Recognise opportunities, but keep to the task at hand

As painful as it was, this was a great opportunity to 'leave the campsite better than how we found it'. There were a lot of old configurations, or things we'd been hesitant to shift given how little resource we had. We made decisions that gave us a bit more confidence over our infrastructure, but in order to stop the task growing too big (and therefore becoming slower, or more dangerous to implement) we had to hold ourselves back from trying to implement a 'perfect' solution. This applies to any day-to-day development, but I needed reminding a couple of times.

Figure out how to keep an inventory, and stick to it

We forgot our analytics team! In the week or so afterwards, as we monitored everything, we realised that our analytics team's tools had all broken as the data sources they were relying on had all jumped ship. This wasn't going to end us by any means, and was a blip in the grand scheme of things, but there's probably something we could've done to avoid this problem. Maybe it was better documentation about what's connected to what, or maybe I should've just spoken to them before implementation.

Thanks for reading

I hope this gave some insight into how a migration across regions can work, how to make the work a bit easier for yourself, and some of the traps you can fall into. If you're planning or have completed a similar move, I'd love to hear about your experience!